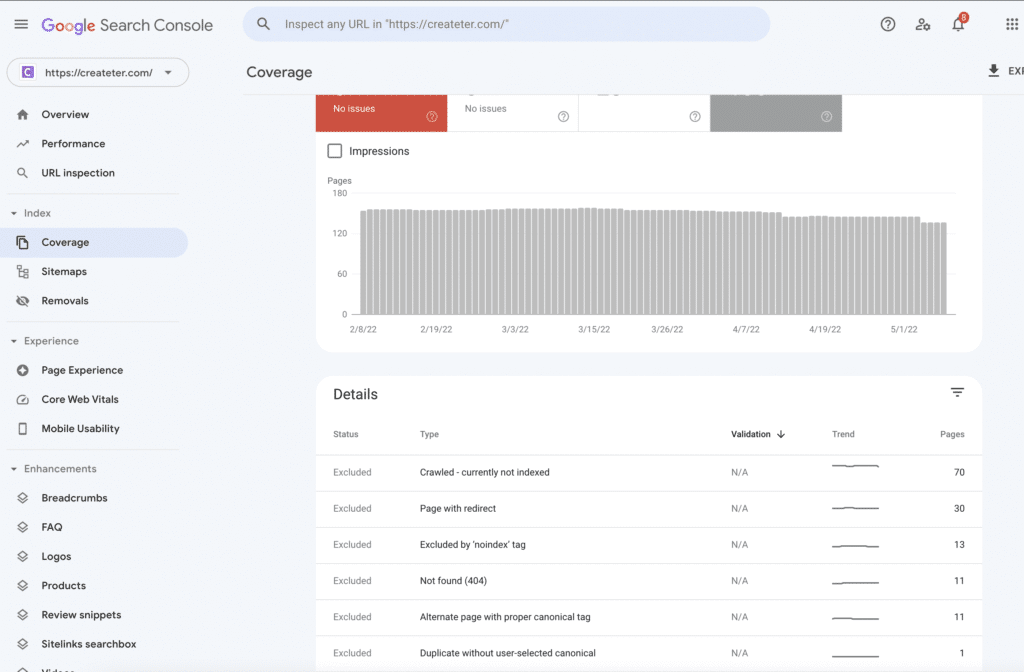

Where to find the Discovered – currently not indexed status

Discovered – currently not indexed is one of the issue types in the Index Coverage report in Google Search Console (GSC). The report shows the status of the pages on your website, including whether they have been crawled and indexed.

URLs with this status are listed in the Excluded group, which contains pages that are not indexed but that Google does not consider to be the result of an error.

You can see a list of the affected URLs by clicking the issue type in Google Search Console.

It’s possible that you intended to exclude some of the listed URLs from the index – that’s OK. Nonetheless, if any of your important pages haven’t been indexed, you should check what problems Google has discovered.

Before looking at what Discovered – currently not indexed means and how to deal with it, let's clarify how Google finds pages to crawl. The most common ways are internal or external links and XML sitemaps, which should include all the pages you want indexed.
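To make the sitemap idea concrete, here is a minimal Python sketch that writes an XML sitemap in the sitemaps.org format using only the standard library. The URLs and dates are hypothetical placeholders, and `build_sitemap` is an illustrative helper, not part of any tool mentioned in this article.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for a list of (url, lastmod) pairs.

    build_sitemap is a hypothetical helper; lastmod may be None.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # characters like & are escaped for us
        if lastmod:  # <lastmod> is optional; W3C date format, e.g. 2024-01-15
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder URLs: list the pages you actually want indexed.
    pages = [
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/first-post", "2024-01-10"),
        ("https://example.com/about", None),
    ]
    print(build_sitemap(pages))
```

For larger sites you would split the URLs across several sitemap files (the protocol caps each file at 50,000 URLs) and submit them in Search Console.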

Crawling means that Googlebot visits the pages it finds and reads their contents. Many crawling issues that websites run into happen because Google doesn't have the resources to crawl every page it finds.

During indexing, Google analyzes a page's contents and stores the page in its index. Your pages must be indexed to appear in search results and get organic traffic from Google. When users enter search queries, indexed pages are evaluated against a variety of ranking criteria.

Due to the limited capacity of Google’s resources, the ever-increasing web, and Google’s expectation of a certain level of quality from pages that it indexes, getting indexed by Google is difficult. Your pages may not be crawled or indexed due to a variety of technical and content-related factors.

These are a few of the most common examples:

- Content quality issues, such as:
  - Outdated content (like old blog articles)
  - Duplicate content
  - Auto-generated content
  - User-generated content

- Poor internal linking structure, which you can improve by:
  - Adding contextual links in your content
  - Linking pages based on their hierarchy
  - Avoiding spammy links and over-optimized anchor text
  - Incorporating links to related products or posts

Another common reason is server overload: Google wanted to crawl your site, but the crawl was postponed because it was expected to overload your server. If this is the case, contact your hosting provider.

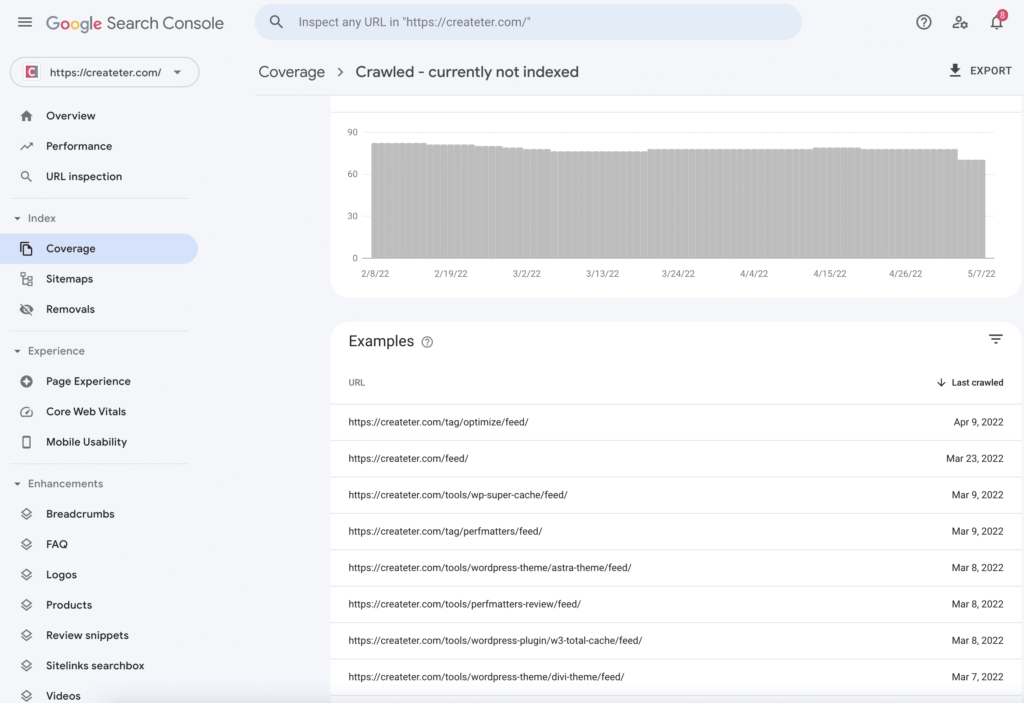

As a result, the last crawl date for these URLs is blank in the report. Along with Crawled – currently not indexed, duplicate content, soft 404s, and crawl issues are among the most common indexing problems reported in Google Search Console, especially for new websites (source: Tomek Rudzki's study of Google's Index Coverage report).

In my experience, very small sites (fewer than about 10 pages) often have trouble getting indexed. At the other extreme, a site may have so much content that Google can't crawl it all right away and decides that some pages aren't worth the effort yet.

A common recommendation is to have at least 15 to 30 posts and to publish a few articles a day rather than all at once.

If you want Google to crawl and index your content, make sure your robots.txt file doesn't block those URLs; if it does, update the file to remove the blocking rules.

Also check your website for these common problems:

1. Poorly written or formatted website – Your website needs to be well-organized and formatted to look good and be easily readable by search engines. Make sure all your text is properly written, and that your pages are easy to navigate.

2. Broken links – Broken links make it harder for search engines to crawl your website and find your pages. If you find any broken links on your website, fix or remove them.

3. Not using keywords – Your website should include keywords that are relevant to your niche and topic. By including these keywords in your site’s title, header, and other places, you will help improve your site’s ranking in Google search results.

4. Inappropriate or spammy content – If you find spammy or inappropriate content on your website, remove it. Spam includes things like low-quality articles, advertisements, or software downloads that are unrelated to your site's content or theme.
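A full broken-link checker would request every URL and look for 404 responses. As a smaller offline sketch, the Python code below extracts the links from a page's HTML and flags internal links that point to URLs missing from a list of pages you know exist (for example, the URLs in your sitemap). `find_broken_internal_links` and the example URLs are hypothetical, and the comparison is exact, so `/page` and `/page/` count as different URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def find_broken_internal_links(page_url, html, known_urls):
    """Return internal links on page_url that point to URLs not in known_urls."""
    parser = LinkExtractor()
    parser.feed(html)
    site = urlparse(page_url).netloc
    broken = []
    for href in parser.hrefs:
        # Resolve relative links and drop any #fragment before comparing.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        # Only same-host links count as internal; mailto: and external links are skipped.
        if urlparse(absolute).netloc == site and absolute not in known_urls:
            broken.append(absolute)
    return broken


if __name__ == "__main__":
    known = {"https://example.com/", "https://example.com/blog/post-1"}
    html = '<a href="/blog/post-1">ok</a> <a href="/blog/old-post">gone</a>'
    print(find_broken_internal_links("https://example.com/", html, known))
```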

Googlebot follows the internal links on your site to discover other pages and understand how they are connected. Make sure your most important pages are consistently linked from other pages on your site.
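One way to check internal linking is to model your site as a graph of pages and links and walk it the way a crawler would. This Python sketch does a breadth-first search from the homepage to compute how many clicks each page is from the start page, and lists orphan pages that exist (for example, in your sitemap) but that no internal link reaches. The link data is a hypothetical hand-written dictionary; in practice you would build it from a crawl of your own site.

```python
from collections import deque


def crawl_depths(links, start):
    """Breadth-first search over an internal-link graph.

    links maps each URL to the list of URLs it links to.
    Returns {url: number of clicks from start} for every reachable page.
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(links, start, all_pages):
    """Pages that exist but cannot be reached by following internal links."""
    reachable = crawl_depths(links, start)
    return sorted(set(all_pages) - set(reachable))


if __name__ == "__main__":
    links = {
        "/": ["/blog", "/about"],
        "/blog": ["/blog/post-1"],
        "/blog/post-1": ["/blog/post-2"],
    }
    pages = ["/", "/about", "/blog", "/blog/post-1", "/blog/post-2", "/blog/post-3"]
    print(crawl_depths(links, "/"))
    print(orphan_pages(links, "/", pages))  # ['/blog/post-3']
```

Pages that turn up as orphans, or that sit many clicks deep, are good candidates for new contextual links from related content.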

Source: John Mueller

Discovered – currently not indexed and Crawled – currently not indexed are related statuses that are often confused, but they mean different things. In both cases the URLs haven't been indexed, but with Crawled – currently not indexed, Google has already visited the page.

With Discovered – currently not indexed, Google knows the page exists but hasn't crawled it yet. Possible reasons a page is not currently indexed include an indexing delay, poor content quality, site structure issues, or the page having been deindexed.

If Googlebot requests a URL and your server returns a 404 status code, Google treats the page as not existing on your website, and it won't be indexed.

For site owners, the result is crawling and indexing problems that keep the affected pages out of the search results (SERPs).

The impact of the Discovered – currently not indexed status varies depending on the size of the website.

The Discovered – currently not indexed status will often resolve itself if you have a smaller website (fewer than 10,000 URLs) with good-quality, unique content. Google may not be having any problems with your site; it may simply not have crawled those URLs yet.

Noindex tags

A page can be kept out of Google's index because it carries a noindex tag (a directive that you, your CMS, or a plugin adds to tell search engines not to index the page), or because Google decides not to index it. Possible causes include:

- The content duplicates other pages on the website

- The content does not meet Google's quality guidelines (for example, it contains spam or malware)

- Legal reasons prevent Google from indexing the page
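To check whether a page actually carries a noindex directive, you can look for a robots meta tag in its HTML and for an `X-Robots-Tag` HTTP header. The sketch below does this with Python's standard `html.parser`; `has_noindex` is a hypothetical helper, you supply the HTML and header value yourself, and it only handles plain header values (not user-agent-prefixed ones such as `googlebot: noindex`).

```python
from html.parser import HTMLParser

# "none" is shorthand for "noindex, nofollow" in robots directives.
NOINDEX_VALUES = {"noindex", "none"}


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            content = attrs.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html, x_robots_tag=""):
    """True if robots meta tags or a plain X-Robots-Tag value contain noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header_directives = [d.strip().lower() for d in x_robots_tag.split(",")]
    return any(d in NOINDEX_VALUES for d in finder.directives + header_directives)


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(has_noindex(page))  # True
```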

On a newer website, a lot of new content will probably be discovered but not indexed for a while. This usually changes gradually over time.

E-A-T (Expertise, Authoritativeness, Trustworthiness) is a concept from Google's Search Quality Rater Guidelines for judging whether content is high quality and deserves to rank well in the SERPs.

In my experience, Google's crawl rate also tends to follow how often you publish: if you publish two posts a day, Google's crawlers may visit your site several times a day.
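Rather than relying on a rule of thumb, you can check how often Googlebot actually visits your site. Search Console's Crawl Stats report shows this directly; alternatively, here is a minimal Python sketch that counts Googlebot requests per day in a server access log. It assumes the common Apache/Nginx "combined" log format, and it trusts the user-agent string, which can be faked; Google recommends verifying real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Captures the date inside [...] and the last quoted field (the user agent)
# of a combined-format log line such as:
# 66.249.66.1 - - [10/Jan/2024:06:25:14 +0000] "GET /blog/post-1 HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
LOG_LINE = re.compile(r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\].*"([^"]*)"\s*$')


def googlebot_hits_per_day(lines):
    """Count requests per day whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "googlebot" in match.group(2).lower():
            hits[match.group(1)] += 1
    return hits


if __name__ == "__main__":
    # Hypothetical path; point this at your own access log.
    with open("access.log", encoding="utf-8", errors="replace") as log:
        for day, count in googlebot_hits_per_day(log).items():
            print(day, count)
```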

Some people publish 10 to 20 posts and are disappointed when those posts don't appear or rank in search results. If you publish at least one post every day for three to four months, you'll likely see a higher crawl and index rate than most other websites.

The "currently not indexed" issue might be caused by something as simple as an incorrect URL, or by your site being too new. Below are two solutions to try.

Backlinks are one of the most important factors in SEO. Not only do they help your site rank higher in search engine results, but they also help people find your site from other websites.

The downside is that getting backlinks from high-DR (Domain Rating) blogs in your niche can be slow and costly.

I recommend publishing high-quality content every day from now on. On my new website, I posted three times a day at 8-hour intervals and submitted each URL to GSC as soon as it went live. After two weeks, Google was indexing my articles within a few hours.

How do I fix Discovered – currently not indexed?

If a page on your website has the Discovered – currently not indexed status, there are a few things you can do to get it indexed.

First, check whether the page has any errors or typos. If the page is error-free, you can work on improving its search engine optimization (SEO).

This can involve adding relevant keywords to your content, optimizing your website for mobile devices, and making sure your website is easy to find.

If all of these efforts fail, you can request indexing manually with the URL Inspection tool in Google Search Console.

This process can take some time, but it is often worth it if you want your page to rank in Google. If you have any questions or concerns about fixing pages that are discovered but not indexed, don't hesitate to contact us.

Why is my page not getting indexed?

There are a few things that can cause your page not to be indexed by Google. First, if your page is not optimized for search engines, they may struggle to find it. Second, your page may not be relevant to the search terms people are using.

If the keywords people search for don't appear in your page title, meta description, and other elements of your page, the page is unlikely to show up for those searches.

If you are not getting any traffic from the search engines, it may be worth trying out different keywords and modifying your website’s content to be more relevant.

It may also be worth conducting a search term analysis to see where your audience is looking for information.

If you are still having difficulty getting your page indexed, it may be time to read the Google Search Central documentation or consult an SEO specialist.

Why is my post not indexing?

There are a few reasons why a post may not be indexed or ranked. One factor that many SEO guides focus on is keyword density: how often your target keyword appears relative to the post's total word count.

To calculate keyword density, divide the number of times your target keyword appears by the total number of words in your post, then multiply by 100. For example, if your target keyword is "dog breeds" and it appears 4 times in a 400-word post, your keyword density is 4 / 400 × 100 = 1%.
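Keyword density (occurrences of the keyword ÷ total words × 100) is easy to script. This Python sketch counts how often a keyword phrase appears in a text and divides by the total word count; the tokenizer (lowercase letters, digits, and apostrophes) and the helper name `keyword_density` are assumptions for illustration, and SEO tools may count words differently.

```python
import re


def keyword_density(text, keyword):
    """Return occurrences of keyword / total words * 100 for the given text.

    Matching is case-insensitive and works on whole words, so a multi-word
    keyword like "dog breeds" must appear as consecutive words.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    occurrences = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase
    )
    return occurrences / len(words) * 100


if __name__ == "__main__":
    post = "Popular dog breeds for families. " + "filler " * 395  # 400 words
    print(round(keyword_density(post, "dog breeds"), 2))  # 0.25
```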

If your post isn't ranking for your target keyword, keyword density is one thing you can review. Keep in mind, though, that Google doesn't publish a "correct" keyword density, and stuffing a page with keywords violates Google's spam policies.

You can try adjusting where your keywords appear or adding relevant keywords to make the post more relevant to searches. You can also use Google Ads or other paid advertising to promote your post and increase its visibility.

What does non indexed mean?

Non-indexed pages are pages that are not in a search engine's index. They are not listed in search results, so potential customers don't see them. Pages can end up non-indexed in a number of ways, including a noindex directive, Google not having crawled them yet, or spam practices such as hidden text or cloaking.

Non-indexed pages can also have benefits. First, pages that aren't meant for the public, such as internal search results or thin pages, won't be found by searchers. Second, as they aren't being seen, they are less likely to be penalized by Google, which can help your site's overall ranking and traffic.

If you are concerned about your website's ranking or suspect that it has been penalized by Google, consider contacting an SEO specialist to make sure your pages are correctly indexed and optimized for search engines.

Content quality and crawl budget issues are often behind the Discovered – currently not indexed status. To fix them, you may have to review many aspects of your pages and optimize them so that Google can crawl your pages efficiently and accurately in the future.

A robots.txt file can help you avoid Discovered – currently not indexed issues by preventing Googlebot from crawling low-quality or duplicate pages, such as pages generated by filters or the search box on your site.
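Before relying on robots.txt rules like these, it helps to confirm which URLs they actually block. The sketch below uses Python's standard `urllib.robotparser` against a hypothetical robots.txt that assumes your site's search results live under `/search` and filtered listings under `/filter/`. Note that `urllib.robotparser` does simple prefix matching and applies the first matching rule, while Google uses the most specific matching rule and also understands `*` and `$` wildcards, so treat this as a rough check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the /search and /filter/ paths are assumptions
# about how this example site builds its search and filter URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /filter/

Sitemap: https://example.com/sitemap.xml
"""


def check_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return {url: True if crawling is allowed} under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    urls = [
        "https://example.com/blog/post-1",
        "https://example.com/search?q=shoes",
        "https://example.com/filter/color-red/",
    ]
    for url, allowed in check_urls(ROBOTS_TXT, urls).items():
        print("allowed" if allowed else "blocked", url)
```

Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in the index without its content if other pages link to it, so use a noindex directive for pages that must stay out of search results.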

Build a sitemap that Google can use to find your pages, make sure your important pages are linked internally, and keep your site architecture consistent. To prioritize the pages that matter most to you, create an indexing plan.