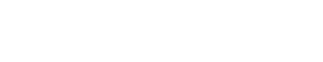

Launching a new website is an exciting venture for any business, but it can also come with its fair share of challenges. One common issue that many new website owners face is the “Crawled – currently not indexed” status on Google Search Console.

The issue of “Crawled – currently not indexed” on Google Search Console usually occurs when Google has visited the website but has chosen not to include it in their index. This can be due to reasons such as duplicate or low-quality content, technical issues, or a new website that hasn’t been fully indexed yet. To resolve this issue, it is important to identify and address the underlying cause, such as improving content quality, fixing technical issues, or ensuring a clear site structure.

Key takeaways:

- The “Crawled – currently not indexed” status means that Google has visited your website but has chosen not to include it in their index.

- Reasons for non-indexing include duplicate or low-quality content, technical issues, or being a new website that hasn’t been fully indexed yet.

- To resolve the issue, focus on improving content quality, addressing technical issues, and ensuring a clear site structure.

Explanation of the issue with the new website not being indexed

The “Crawled – currently not indexed” status means that while Google has crawled or visited your site, it has chosen not to include it in its index. This can happen for various reasons, such as duplicate content, low-quality content, or technical issues on the site.

Understanding the root cause is crucial to resolving this issue. Duplicate content can confuse search engines and make it difficult for them to decide which version to index. Low-quality content might not provide enough value to users, leading search engines to exclude it from their index.

Technical issues can also prevent a website from being indexed. These could include problems with the site’s robots.txt file, which tells search engines which pages to crawl and which to ignore, or issues with the site’s sitemap, which provides a roadmap of your site for search engines.

To fix this issue, you’ll need to identify and address the underlying cause. This might involve improving your content quality, resolving any technical issues, or ensuring that your site’s structure is easy for search engines to understand.

Remember, patience is key when launching a new website. It can take time for search engines to crawl and index your site fully. So don’t be discouraged if you don’t see immediate results. Keep focusing on creating high-quality content and providing a great user experience, and you’ll start seeing improvements in your site’s indexing status soon.

Importance of Indexing

Indexing is a key process in the world of search engine optimization (SEO). It’s the method by which search engines like Google store and organize the pages they have crawled, so they can retrieve them when a user types a query into the search bar.

Why indexing is crucial for a website’s visibility and search engine rankings

Visibility: If a website isn’t indexed, it’s virtually invisible to search engines. This means that no matter how well-designed or informative the site may be, it won’t appear in search results.

Search Engine Rankings: Indexing is also a prerequisite for ranking on search engine results pages (SERPs). A page that isn’t in the index cannot rank at all, no matter how strong its content is.

However, it’s not uncommon for new websites to encounter an issue known as ‘Crawled – currently not indexed’. This means that while Google’s bots have crawled the site, they have not yet indexed it. This could be due to various reasons including technical errors, low-quality content or because the site is new and Google hasn’t had time to index it yet.

To fix this issue, one can leverage SEO tools like Rank Math, which offers solutions for optimizing your website’s crawlability and indexability. Ensuring your website is easily crawlable and indexable will help improve your visibility on SERPs and boost your overall SEO performance.

Remember, a well-indexed website is more likely to reach its target audience and achieve its business goals. So, don’t underestimate the power of proper indexing!

Reasons for Non-Indexing

In the digital world, having a website that is not indexed by search engines is akin to opening a store in the middle of a desert. No matter how visually appealing or content-rich your website is, if it’s not indexed, it’s virtually invisible to online users.

Common reasons why a website may not be indexed by search engines

- Technical Errors: Technical issues such as an incorrect robots.txt file, server errors, or noindex meta tags can prevent search engines from indexing your site.

- Poor Content Quality: Search engines like Google prioritize high-quality content. If your site has thin or duplicate content, it may not get indexed.

- Website Structure: A complex or confusing website structure can make it difficult for search engine bots to crawl and index your site.

- New Website: If your website is new, it may take some time before search engines index it. Patience is key here!

Here’s a quick recap:

| Reason | Explanation |
|---|---|
| Technical Errors | An incorrect robots.txt file, server errors, or noindex meta tags can prevent search engine bots from indexing your site. |
| Poor Content Quality | If your site has thin or duplicate content, it may not get indexed by search engines. |
| Website Structure | A complex or confusing website structure can make it difficult for search engine bots to crawl and index your site. |
| New Website | New websites may take some time before they get indexed by search engines. Patience is key! |

In conclusion, ensuring that your website is indexed by search engines is crucial for online visibility and success. By understanding and addressing the common reasons for non-indexing, you can increase the chances of your website being crawled and indexed by search engines such as Google.
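One of the technical errors listed above, a stray noindex meta tag, is easy to check for programmatically. The following is a minimal sketch using only Python’s standard library; the names `RobotsMetaParser` and `has_noindex` are hypothetical, and it only inspects meta tags in the HTML, not the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # Directives are comma-separated, e.g. "noindex, nofollow"
            parts = attr_map.get("content", "").split(",")
            self.directives.extend(p.strip().lower() for p in parts if p.strip())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags ask search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow"
    return "noindex" in parser.directives or "none" in parser.directives
```

Calling `has_noindex(page_html)` on the HTML of a page you expect to rank is a quick sanity check: if it returns `True`, the tag is telling search engines to leave the page out of the index.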

Technical SEO Audit

For a website to gain visibility, it needs to be indexed by search engines. If a new website isn’t being indexed, it’s akin to having a billboard in the desert. No one will find it! A technical SEO audit can help identify and fix indexing issues, ensuring that the site is crawled and indexed by search engines like Google.

Steps to perform a technical SEO audit to identify and fix indexing issues

Step 1: Begin by setting up Google Search Console for the website. This tool provides insights into how Google views and indexes the site.

Step 2: Check for crawl errors. These could be preventing Google from accessing and indexing the site.

Step 3: Review the robots.txt file. This file tells search engines which pages or sections of the site they can or cannot visit.

Step 4: Look for XML sitemap issues. An XML sitemap helps search engines understand the structure of the site and find new pages to index.

Step 5: Check for duplicate content. Duplicate content can confuse search engines and may lead to some pages not being indexed.

Step 6: Review the site’s URL structure. URLs should be SEO-friendly, with a clear and concise structure that helps search engines understand page hierarchy.

Step 7: Check for broken links. Broken links can harm a site’s SEO and may prevent some pages from being indexed.

Step 8: Finally, ensure that all important pages are linked to from other parts of the site. This helps search engines find and index these pages.
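Step 8 can be partly automated once you have a map of your internal links, for example from a crawl export. The sketch below assumes a hypothetical `internal_links` dictionary that maps each page path to the set of pages it links to, and flags pages that cannot be reached by following links from the homepage. The function name and data shape are illustrative assumptions, not the output format of any particular tool.

```python
def find_orphan_pages(internal_links: dict[str, set[str]], homepage: str) -> set[str]:
    """Return pages that cannot be reached by following internal links from the homepage."""
    reachable = {homepage}
    queue = [homepage]
    while queue:
        page = queue.pop()
        for target in internal_links.get(page, set()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    # Every page that appears either as a link source or as a link target
    all_pages = set(internal_links) | {
        target for targets in internal_links.values() for target in targets
    }
    return all_pages - reachable


# Hypothetical site: "/landing" links out, but nothing links to it.
site = {
    "/": {"/about", "/blog"},
    "/about": set(),
    "/blog": {"/blog/post-1"},
    "/blog/post-1": {"/"},
    "/landing": {"/"},
}
orphans = find_orphan_pages(site, "/")
```

Here `orphans` contains only `/landing`. Pages like this are the ones to link to from navigation, category pages, or related posts so that crawlers can find them.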

Remember, technical SEO audits should be performed regularly to keep your website in optimal health and ensure maximum visibility in search engine results.

XML Sitemap

Launching a new website is an exciting venture, but it’s only the beginning. The next step is to ensure that search engines like Google and Bing can find, crawl, and index your site. This is where the XML sitemap comes in.

An XML sitemap is a powerful tool that aids search engines in understanding the structure of your website. It serves as a roadmap, guiding search engine bots to all the important pages on your site.

Creating and submitting an XML sitemap to help search engines crawl and index the website

Creating an XML sitemap can be done with a sitemap generator, an SEO plugin, or a short script. Once created, it should include all SEO-relevant pages and exclude any page that doesn’t add value to your SEO efforts.
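If you would rather not rely on an online generator, a basic sitemap is simple enough to build yourself. The following is a minimal sketch using Python’s standard library; `build_sitemap` is a hypothetical helper and the example.com URLs are placeholders. It emits only the required `<loc>` element for each URL and leaves out optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a sitemap XML document listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/first-post",
])
```

Saving `sitemap_xml` as `sitemap.xml` in the site root gives you a file you can submit. Only pass in the URLs you actually want indexed.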

Submitting your XML sitemap is the next crucial step. This can be done through Google Search Console and Bing Webmaster Tools. After submission, it’s important to address any errors or warnings promptly.

Analyzing trends and calculating indexation rates are also part of maintaining an effective XML sitemap. This helps in understanding how well your pages are being indexed.
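As a rough illustration, an indexation rate can be calculated as the share of submitted pages that ended up indexed. That definition is an assumption here, since tools may count pages differently, and the function name and figures below are made up for the example.

```python
def indexation_rate(indexed_pages: int, submitted_pages: int) -> float:
    """Return the fraction of submitted pages that are indexed (0.0 to 1.0)."""
    if submitted_pages <= 0:
        raise ValueError("submitted_pages must be positive")
    return indexed_pages / submitted_pages


# Hypothetical numbers: 42 of 60 submitted pages are indexed.
rate = indexation_rate(42, 60)
print(f"Indexation rate: {rate:.0%}")
```

Tracking this number per sitemap over time makes it easier to spot a section of the site that is falling behind.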

Addressing causes of exclusion for submitted pages is another essential task. This ensures that all valuable pages are crawled and indexed by search engines.

In conclusion, an XML sitemap is not a guarantee for immediate indexing or higher ranking, but it certainly increases your chances by making it easier for search engines to find and understand your website’s content.

Robots.txt File

For a new website, getting crawled and indexed by search engines is crucial. One of the ways to ensure this happens is by effectively using a robots.txt file. This file, residing in the root directory of your website, instructs search engine bots on which parts of your site they can or cannot access.

Understanding and optimizing the robots.txt file to allow search engine crawlers access to important pages

Optimizing the robots.txt file is key to ensuring that search engine crawlers like Googlebot, Bingbot, and others can access important pages on your site. This file enables you to guide these bots towards the pages you want them to crawl and index, while keeping them out of areas you’d rather they not crawl. Keep in mind that robots.txt is publicly readable and only controls crawling, so it should not be relied on to protect private content.

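To view your current robots.txt file, simply type the full URL for your homepage and add /robots.txt at the end. To create or edit it, change the plain-text file named robots.txt in the root directory of your site, either directly on the server or through your CMS or SEO plugin. The file allows you to manage crawl traffic effectively and prevent image, video, and audio files from appearing in Google search results.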

Remember, it’s not just about getting your site crawled but ensuring that the right pages are indexed. By strategically using your robots.txt file, you can make sure that search engine bots spend their crawl budget on pages that matter most to your business.

Here’s a quick summary table:

| Task | Action |
|---|---|
| View Robots.txt File | Type full URL for homepage and add /robots.txt |
| Manage Crawl Traffic | Use Robots.txt file |
| Prevent Media Files from Appearing in Search Results | Use Robots.txt file |

Note: Always double-check your robots.txt file before implementation to avoid blocking important pages inadvertently.
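One way to do that double-check is to test a list of important URLs against the rules before you upload the file. The sketch below uses `urllib.robotparser` from Python’s standard library. The robots.txt content, the example.com URLs, and the `blocked_urls` helper are all hypothetical, and Python’s parser may not match every detail of how Google interprets rules (wildcards, for instance), so treat it as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin and cart pages, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/admin/users",
]
blocked = blocked_urls(ROBOTS_TXT, important_pages)
```

With these rules, `blocked` contains only the `/admin/users` URL. If a page you want indexed ever shows up in that list, fix the rule before the file goes live.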

Website Speed and Performance

In the digital world, speed is everything. Whether it’s loading a webpage or indexing a site, speed matters. The speed and performance of a website can significantly impact its visibility on search engine result pages (SERPs).

The impact of website speed and performance on indexing and tips to improve it

Website speed and performance are crucial factors that search engines consider when indexing a site. A slow-loading website can hinder the crawling process, leading to fewer pages being indexed. This can result in lower visibility on SERPs, affecting the overall traffic and conversions of the site.

Improving Website Speed: There are several ways to enhance your website’s speed. One effective method is optimizing images and other media files. Large file sizes can slow down page load times significantly. Therefore, compressing these files without compromising their quality can boost your site’s speed.

Enhancing Performance: Another strategy is implementing lazy loading. This technique defers loading images and other off-screen content until the user scrolls to it, so the visible part of the page loads faster and the overall user experience improves.

Using a Content Delivery Network (CDN): A CDN can also help improve website speed by serving content from servers located close to each visitor, which spreads the load of delivering content across many machines.

Implementing Caching: Caching stores copies of files in a temporary storage location for quicker access upon request. This reduces the time it takes for data to be sent between the client, server, and database, thereby improving website speed.
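Browser caching is usually controlled through the `Cache-Control` HTTP response header. As a rough sketch, the hypothetical helper below picks a header value based on file type: long-lived caching for static assets, and revalidation on every request for HTML pages. The extension list and the one-year lifetime are illustrative assumptions, not recommendations from this article, and the long lifetime only makes sense if asset filenames change whenever their content changes.

```python
from pathlib import PurePosixPath

# Hypothetical policy: file types treated as long-lived static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for the given URL path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Cache for one year (in seconds); assumes versioned filenames.
        return "public, max-age=31536000, immutable"
    # HTML and everything else: the browser must revalidate before reusing a copy.
    return "no-cache"


header_for_script = cache_control_for("/assets/app.3f9a.js")
header_for_page = cache_control_for("/blog/post-1")
```

In practice this kind of rule usually lives in the web server or CDN configuration rather than in application code, but the logic is the same.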

Remember, a fast and efficient website not only enhances user experience but also aids in better indexing by search engines. It’s a win-win situation!

Backlinks and Social Signals

In the world of digital marketing, backlinks and social signals play a crucial role. They are like the lifeblood of a website’s online presence. When a new website is launched, it’s essential to ensure that it gets indexed by search engines. But how can this be achieved? The answer lies in backlinks and social signals.

How backlinks and social signals can help increase the chances of a website being indexed

Backlinks: These are links from other websites that point to your site. They are an indication to search engines that your content is valuable and relevant. The more high-quality backlinks a website has, the higher the chances of it being indexed by search engines.

Social Signals: These are interactions on social media platforms like likes, shares, retweets, comments, and even views. They indicate to search engines that your content is engaging and worth indexing.

However, it’s not just about quantity but also quality. It’s better to have fewer high-quality backlinks and genuine social interactions than a large number of low-quality ones.

Here’s a table summarizing how these two factors can help:

| Factors | How they help |
|---|---|
| Backlinks | High-quality backlinks indicate to search engines that your content is valuable and relevant. They increase the chances of your website being indexed by search engines. |
| Social Signals | Social interactions on platforms like Facebook, Twitter, Instagram, etc., show that your content is engaging. They make your website more likely to be indexed by search engines. Genuine social interactions are more valuable than a large number of low-quality ones. |

Remember, getting your new website indexed isn’t an overnight process. It requires consistent effort in creating quality content, building high-quality backlinks, and fostering genuine social interactions.

Understanding Crawled – Currently Not Indexed

For website owners, a common issue they may encounter is the “Crawled – Currently Not Indexed” status in Google Search Console. This means that while Google’s bots have crawled the website, the pages are not yet included in Google’s index.

Why does this happen?

There are several reasons why a page might be crawled but not indexed. It could be due to technical issues like broken links or server errors, or to poor-quality or duplicate content. A page blocked by the robots.txt file, by contrast, is not crawled at all, so it appears under a different status in Search Console.

How to increase website crawling and indexing?

Improve Site Structure: A well-structured site with clear navigation aids Google bots in crawling and indexing.

Quality Content: High-quality, unique content is more likely to be indexed by Google.

Use of Sitemaps: Sitemaps guide search engine bots to the important pages on your site, improving their chances of being indexed.

Robots.txt: Make sure your robots.txt file isn’t blocking important pages from being crawled and indexed.

In conclusion, improving your website’s crawlability and indexability is crucial for search engine visibility. By addressing these key areas, you can increase the chances of your new website being crawled and indexed by Google.

| Action | Benefit |
|---|---|
| Improve Site Structure | Aids in easier crawling and indexing |
| Quality Content | More likely to be indexed |
| Use of Sitemaps | Guides bots to important pages |
| Check Robots.txt | Ensures pages aren’t blocked |

Remember, patience is key when it comes to SEO. It may take some time before you see your efforts reflected in Google’s index.

FAQ (Frequently Asked Questions)

Launching a new website can be an exciting venture. However, it can also bring about a myriad of questions, especially when it comes to Google Search’s crawling and indexing processes. This section aims to address some of the most frequently asked questions.

Answers to common questions related to website crawling, indexing, and SEO

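What is Crawling and Indexing? Google uses software known as “web crawlers” to discover publicly available webpages. Crawling is the process by which Googlebot visits new and updated pages. Indexing is the step that follows: Google analyzes the content of a crawled page and stores it in the Google index so that it can appear in search results.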

Why is my website not indexed? If your website is not indexed, it could be due to several reasons such as poor site structure, lack of high-quality backlinks, or technical issues like a robots.txt file blocking search engines.

How can I increase my website’s crawl rate? To increase your website’s crawl rate, ensure that your site has a clear navigation structure, use internal links wisely, and keep your sitemap updated.

What does ‘Crawled – currently not indexed’ mean? This status in the Google Search Console indicates that while Googlebot has crawled the page, it has chosen not to include it in its search results. This could be due to issues like low-quality content or violations of Google’s webmaster guidelines.

Here’s a table summarizing the key points:

| Question | Answer |
|---|---|
| What is Crawling and Indexing? | Crawling is the process by which Googlebot discovers new and updated pages. Indexing is the step where Google analyzes those pages and adds them to the Google index. |
| Why is my website not indexed? | It could be due to poor site structure, lack of high-quality backlinks, or technical issues like a robots.txt file blocking search engines. |
| How can I increase my website’s crawl rate? | Ensure that your site has a clear navigation structure, use internal links wisely, and keep your sitemap updated. |
| What does ‘Crawled – currently not indexed’ mean? | It indicates that while Googlebot has crawled the page, it has chosen not to include it in its search results due to issues like low-quality content or violations of Google’s webmaster guidelines. |

Remember that SEO is a long-term strategy and requires patience. It may take some time before you start seeing results.